NYC Streaming Meetup, Tuesday March 18th, No RSVP Needed

My live blog of the Super Bowl stream is up. Click here to jump to the section with the updates. I’ll compare the video quality, latency and other details from Tubi across Fire TV, Apple TV, Roku, LG and Samsung TVs, iPhones, iPads and MacBooks. I’ll also check the streams on YouTube TV, Sling TV, Hulu+ Live TV, DIRECTV Stream, and Fubo. Below are some confirmed Super Bowl streaming technical specs and previous viewership numbers. All my blog posts on testing previous Super Bowl streams can be found at www.superbowlstreaming.com.

[And that’s a wrap! While it will be a few days before we have detailed viewership numbers, FOX Sports and Tubi delivered the highest-quality Super Bowl stream ever, with the largest viewership as measured in peak concurrent users.]

February 11th: The final, updated Tubi Super Bowl viewership numbers are out. Tubi peaked at 15.5 million concurrent devices and had an average minute audience (AMA) of 13.6 million. Across all game-day programming, Tubi reached 24 million unique viewers. Viewership on Telemundo and NFL Digital properties added another 900,000 AMA.
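AMA and peak concurrency measure different things: peak is the single highest count of simultaneous devices, while AMA averages the audience over every minute of the telecast (total viewer-minutes divided by the number of minutes). A minimal sketch of the difference, assuming one concurrency sample per minute; the sample values are hypothetical and are not Tubi’s data:

```python
def audience_summary(per_minute_viewers: list[int]) -> tuple[int, float]:
    """Return (peak concurrency, average minute audience) for a telecast.

    Assumes exactly one sample per minute of the telecast, so AMA is
    total viewer-minutes divided by minutes, i.e. the mean of the samples.
    """
    if not per_minute_viewers:
        raise ValueError("need at least one per-minute sample")
    peak = max(per_minute_viewers)
    ama = sum(per_minute_viewers) / len(per_minute_viewers)
    return peak, ama


# Hypothetical per-minute counts (in millions) for a five-minute window.
samples = [10, 12, 15, 14, 9]
peak, ama = audience_summary(samples)
print(peak, ama)  # 15 12.0
```

Applied to the reported totals, Tubi’s 13.6 million AMA was roughly 88% of its 15.5 million peak, which is why the two figures should not be used interchangeably.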

Note: I am under NDA with FOX, having flown out to their Media Center in Tempe last year to see their workflow and setup. There are some specific details of the stream I can’t share without their permission, and I thank them for the insight and access they are providing me.

The FOX media team is one of the most experienced in the industry at streaming large-scale live events. In 2023, FOX used k6 to replicate traffic, load-testing up to 100 million requests per second (RPS), so they know how to test and scale video services. I expect the Super Bowl stream to be flawless; the only new element this year is playback in Tubi instead of the FOX Sports app, which the company has been load-testing leading up to the event. In the 24 hours before the Super Bowl, the Tubi app has been loading in under 2 seconds for me on Fire TV and Apple TV devices.

Tubi’s player will look slightly different for the Super Bowl stream. Some regular player features have been removed so the refreshed layout can focus on sport-specific content. Tubi says all the original features will return to the player after the Super Bowl. The game will not be available for replay on Tubi; it will be live only, with no VOD archive. The live stream will be in English, with no translations, but the game is available in Spanish on FOX Deportes and Telemundo. Closed captioning will only be available in English.

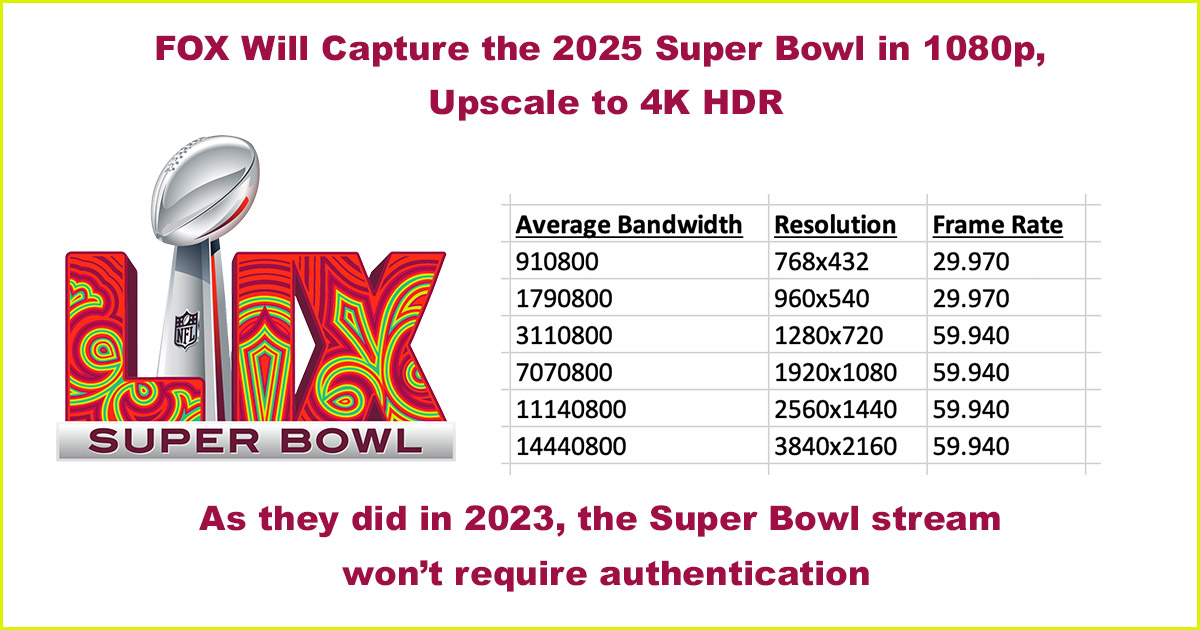

FOX will capture the game in 1080p HDR, upscale it to 4K (as they did in 2020 & 2023), and digitally distribute it to vMVPDs and Tubi. To see the game via Tubi, viewers must sign up with an email and password or authenticate via an Amazon account on the Fire TV platform. On Fire TV, to log in with your Tubi account details instead, you must first hit the cancel button, which sends you to a screen where you can log in via a QR or activation code. If you use the Tubi app as a guest, you won’t be able to see the stream. Viewers cannot stream the game via the FOX Sports app and will be redirected to Tubi.

To view Tubi on smart TVs, you must have LG webOS 5+ or a Samsung model from 2018 or newer. For LG TVs running webOS 4, you can AirPlay the stream to the TV. The maximum bitrate on Tubi will be 14.4Mbps; you can see details here on FOX’s encoding bitrate ladder. Post Malone’s Super Bowl tailgate concert is not on Tubi and will live stream exclusively on the NFL’s YouTube channel, since YouTube is the official sponsor of the pregame party.

The 2024 Super Bowl stream on Paramount+ had an average minute audience of 8.5 million and required users to authenticate. However, Paramount+ was offering a free trial during the Super Bowl, so technically, anyone could watch the event for free. Over the last three years, Super Bowl streaming viewership across Paramount, NBC Sports and FOX grew an average of 15% per year.
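To put the 15% average in perspective, a naive projection that compounds Paramount+’s 8.5 million AMA forward one year can be compared with Tubi’s reported 13.6 million AMA. A minimal sketch of that arithmetic, assuming simple annual compounding from the 2024 figure (the helper names are hypothetical):

```python
def project(value: float, annual_growth: float, years: int = 1) -> float:
    """Compound `value` forward by `annual_growth` (0.15 == 15%) for `years` years."""
    return value * (1 + annual_growth) ** years


def growth_rate(old: float, new: float) -> float:
    """Year-over-year growth as a fraction (0.6 == 60%)."""
    return new / old - 1


# AMA figures in millions, as reported in this post.
paramount_2024 = 8.5
tubi_2025 = 13.6

print(f"{project(paramount_2024, 0.15):.1f}")           # 9.8
print(f"{growth_rate(paramount_2024, tubi_2025):.0%}")  # 60%
```

In other words, the historical trend pointed to roughly 9.8 million, while the actual 2025 AMA came in about 60% higher than 2024.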

For stream testing, I’m using multiple Rokus (4800, 3820, 3820CA2), an Apple TV 4K (A2843), an Amazon Fire TV Stick 4K (M3N6RA), an Amazon Fire TV Stick 4K Max (K3R6AT), a DIRECTV Gemini (P21KW-500), two LG OLEDs (55C9AUA/65BXPUA) and two Samsung TVs (UN40F5500AF/QN65S90CAFXZA), in addition to three iPads and two iPhones (Verizon). All TVs and streaming devices are connected via Ethernet, and my ISPs are Optimum and Verizon. I am also collecting OTA data from local users in Kansas City for latency testing.

Last summer, I visited FOX’s new $200 million Media Center in Tempe, Arizona, the company’s streaming and technology hub, and it was the most impressive facility I’ve ever seen. FOX’s linear, streaming and VOD distribution services originate in the building, covering acquisition, encoding, transcoding, editing, closed captioning, archiving, storage and distribution. Part of the AWS backbone runs through Tempe, and FOX’s IP network connects directly to four AWS transit centers across the country. FOX seamlessly operates between on-prem hardware and cloud-based infrastructure in Tempe and Los Angeles, with full backup capability within the AWS cloud. The Media Center handles more than 50,000 live events a year.

FOX’s distribution workflow has four CDN vendors in the mix: Akamai, Fastly, Amazon CloudFront and Qwilt. Note that just because four CDNs are set up does not mean they will all get traffic, or the same volume of traffic. For example, in 2024, Paramount had four CDNs in the mix, but Akamai and CloudFront got most of the traffic. Plenty of extra capacity is provisioned, but it doesn’t always get used.
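Why provisioned CDNs end up with very different traffic shares can be illustrated with weighted session assignment. This is a minimal sketch, assuming a simple weighted random pick per playback session; the weights are hypothetical and are not FOX’s or Paramount’s actual split, and production multi-CDN steering typically also reacts to real-time performance and availability data:

```python
import random
from collections import Counter

# Hypothetical traffic weights: every CDN is in the mix, but the split is uneven.
CDN_WEIGHTS = {
    "Akamai": 0.45,
    "CloudFront": 0.40,
    "Fastly": 0.10,
    "Qwilt": 0.05,
}
SESSIONS = 100_000


def pick_cdn(weights: dict[str, float], rng: random.Random) -> str:
    """Choose one CDN for a new playback session, proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(sessions: int, seed: int = 42) -> Counter:
    """Assign `sessions` sessions and count how many land on each CDN."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return Counter(pick_cdn(CDN_WEIGHTS, rng) for _ in range(sessions))


counts = simulate(SESSIONS)
for cdn, n in counts.most_common():
    print(f"{cdn:<10} {n / SESSIONS:.1%}")
```

A CDN with a weight of zero stays provisioned and ready as backup capacity but receives no sessions, which matches the point that extra capacity doesn’t always get used.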

All streams are in HLS with two-second chunks, with a maximum bitrate on Tubi of 14.4Mbps. A few news outlets are reporting that Tubi is selling local ads within the stream, which is inaccurate. No dynamic ad insertion (DAI) is being used, and all ads are burned in.
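The 14.4Mbps top bitrate and two-second HLS chunks together determine how much data each segment carries. A short sketch of that arithmetic, assuming the bitrate applies evenly across each segment and ignoring audio, container and playlist overhead, so the results are rough estimates:

```python
def segment_size_bytes(bitrate_bps: float, segment_seconds: float) -> float:
    """Approximate payload of one media segment: bitrate x duration / 8 bits per byte."""
    return bitrate_bps * segment_seconds / 8


TOP_BITRATE_BPS = 14.4e6  # Tubi's maximum bitrate, per this post
SEGMENT_SECONDS = 2       # two-second HLS chunks, per this post

size = segment_size_bytes(TOP_BITRATE_BPS, SEGMENT_SECONDS)
segments_per_hour = 3600 / SEGMENT_SECONDS

print(f"{size / 1e6:.1f} MB per segment")                              # 3.6 MB per segment
print(f"{segments_per_hour:.0f} segments per hour")                    # 1800 segments per hour
print(f"{size * segments_per_hour / 1e9:.2f} GB per hour at the top rung")  # 6.48 GB per hour at the top rung
```

Short segments mean players request a new file every two seconds, which keeps latency down but multiplies request volume across millions of concurrent devices.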

On February 6th, the U.S. Bankruptcy Court approved the sale of select Edgio assets to Parler for $7.5 million. Parler plans to hire 120 former Edgio employees and acquired assets in approximately 25 global locations out of the roughly 160 locations in Edgio’s footprint. I will soon provide more details on the assets acquired and what Parler will use them for. The court also approved the sale of non-overlapping Edgio assets to Encore Technologies for $2.5 million.

At 9am MT on January 16th, the Edgecast and Limelight networks will go dark. I saw an employee comment that their career at LLNW and Edgecast was “wasted,” but that is NOT the case. Limelight Networks, Edgecast as a standalone company before it was acquired, VDMS after the Edgecast acquisition, and finally Edgio, which combined them all, employed thousands of people over 23 years. Combined, they delivered what I would guess to be tens of billions of streams and helped support some of the most significant streaming events of their time. Most importantly, that work allowed many to test, trial and fine-tune new delivery technologies and figure out how to do it at scale.

What many employees learned from being in the trenches, figuring out how to solve complex problems and supporting customer needs has allowed them to advance their careers in the CDN industry. Many have applied that knowledge at the companies they now work for, across many different industries, not just video. Don’t allow Edgio’s failure as a company to make you second-guess what you know and the experience you have gained. In life, good people give you happiness. The worst people give you a lesson. And the best people give you memories. It’s the same in business. For those who worked for any of the Limelight/Edgecast/VDMS/Edgio companies, you gained friends and, hopefully, learned much about business principles.

While many will want to lay blame for what happened with Edgio, that time has passed. Much, but not all, of Limelight’s management team from 2020 and earlier, before the Edgecast acquisition and the rebrand to Edgio, set the company on a path from which it could not recover. They made terrible decisions because they didn’t listen to customers, didn’t watch their P&L, didn’t understand the competitive landscape and had egos that were out of touch with reality. New management had no chance to fix what was broken and was set up for failure. Edgio won’t be the last CDN to shut down; by my count, it was about the 25th CDN vendor to shut down over the past three decades (see cdnlist.com).

If you are heading to the NAB Show in April, I will give you a free pass for the Streaming Summit; please contact me if you’re interested. Also, next month, I will host a Zoom session for anyone looking for tips and tricks on advancing their career in the industry or finding a new job, so follow me on LinkedIn for details coming soon.

For those looking for new jobs, remember how an elite leader in any domain thinks when it’s time for change: Building a skill takes time and effort, and I will invest the time and be tenaciously persistent. I understand that discomfort is part of the process. If I avoid discomfort, I prevent learning. That’s the mindset of someone who is getting better every day. Educate yourself on what you need to know to get the next job. The financial currency in the business world is information. It’s leverage. Change is inevitable, but progress is optional. Stream on.

FOX shared with me its encoding bitrate ladder for the Super Bowl, with the max bitrate topping out at close to 15Mbps. As they did in 2023, FOX’s 2025 Super Bowl stream won’t require authentication on FOX’s platform. This blog post details the viewership numbers for the previous Super Bowl streams from 2012-2024.

Amazon’s exclusive NFL AFC Wild Card game is live on Prime, and the company tells me they are seeing record viewership. On Twitter, some viewers reported various audio and video quality issues, which is to be expected for an event with a large audience watching on a large number of older devices. Amazon says they do not see any major viewing issues across their audience.

The stream latency on my Fire TV devices averages six seconds compared to Baltimore’s ABC broadcast TV feed. The Prime app on LG TV averages eight seconds. The stream on my MacBook is 30+ seconds behind the stream on Fire TV devices, which isn’t surprising for a video being played in the browser.

On my Fire TV Max devices, the stream took slightly under 14 seconds to start, but when I tested again later in the game, the time to first frame (TTFF) was down to 3 seconds. On my iPad and iPhone, I experienced no quality issues. Amazon’s goal for live events is latency under 10 seconds, which I’ve seen on all devices except the desktop. I understand that Amazon’s Sye tech isn’t used to deliver streams to desktop computers, so higher latency is expected. In my testing of pirated streams, latency averaged more than 90 seconds behind Amazon’s stream.
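The per-device latency averages reported above can be checked against Amazon’s sub-10-second goal with a few lines of code. A minimal sketch, using the averages from this post; the MacBook value is an approximation, since it was measured only as “30+ seconds” behind Fire TV, so 6 + 30 = 36 seconds is used as a floor:

```python
# Average delay behind the ABC broadcast feed, in seconds, as reported above.
# The MacBook figure is a floor: "30+ seconds" behind Fire TV's 6-second average.
LATENCY_VS_BROADCAST = {
    "Fire TV": 6,
    "LG TV (Prime app)": 8,
    "MacBook (browser)": 6 + 30,
}
AMAZON_TARGET_SECONDS = 10  # Amazon's stated latency goal for live events


def meets_target(latency_s: float, target_s: float = AMAZON_TARGET_SECONDS) -> bool:
    """True when a device's delay behind broadcast is under the target."""
    return latency_s < target_s


for device, latency in LATENCY_VS_BROADCAST.items():
    status = "meets" if meets_target(latency) else "misses"
    print(f"{device:<20} {latency:>3}s  {status} the {AMAZON_TARGET_SECONDS}s goal")
```

Comparing each device against the same broadcast reference, rather than against each other, keeps the numbers comparable across platforms.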

Speaking of Sye, I’m playing the game from three Fire TV Max devices on three TVs, and the frame sync on all of them is perfect. I’ve confirmed that all three are getting Sye streams, which would explain the great experience. Comments on others’ streaming experiences can be seen on this LinkedIn post.

Final viewership numbers are out for the NFL’s Christmas Day games, with a global AMA of 31.3 million for the Ravens-Texans game, 24.3 million of it from the US. The number includes Netflix’s stream, CBS local market viewing and NFL+ mobile viewing. While many will want to compare these numbers to the streaming of previous NFL games, it’s hard to make a fair comparison.

The reported numbers combine Nielsen’s Live Streaming Measurement service with Netflix’s first-party streaming data. AMA figures are based on Nielsen’s National Live+SD data in the US, which includes out-of-home viewing, CBS local market viewing, NFL+, and mobile and web viewing across Netflix. International data is based on first-party Netflix Live+1 data for TV, mobile and web, along with NFL-reported viewing for the NFL’s international distributors and NFL Game Pass outside the US.

You can see my post here that lists “The Largest Live Streaming Events in History and How They Are Measured.” I see some comparing this to the Super Bowl, but from a streaming standpoint, the Super Bowl’s AMA viewership is much smaller. You can see a breakdown of those numbers here, “Thirteen Years of Super Bowl Streaming Viewership Stats, 2012-2024.”