New Report Reveals that Ad Blocking is Pervasive Amongst Millennials Who Choose Illegal Streaming Over Linear Television

Millennials are watching video content, but in most cases it’s on-demand video, not live TV. This is one of the reasons why, when content aggregators build television channels for them, they don’t show up. It’s a truism that eventually shuttered Pivot, and it explains why Vice’s average viewer is 40 years old and why its ratings aren’t quite as strong as those of H2, the channel it replaced.

While many studies have explored millennials’ clear preference for streaming content over linear TV, Anatomy just released a report that takes a closer look at how young millennials (18-24) are viewing their video content. Specifically, it explores whether they pay for what they stream with their data, dollars or demographics. Anatomy surveyed over 2,500 young millennials to get some hard data around their behaviors and opinions. The firm looked at this subset of the millennial population because, as Anatomy’s CEO Gabriella Mirabelli told me, “we feel that this cohort is the engine behind the disruptive behaviors that will be rocking the media landscape down the road. As this population ages they don’t adopt regressive technology, but rather propagate their behaviors up and down the demographic spectrum.”

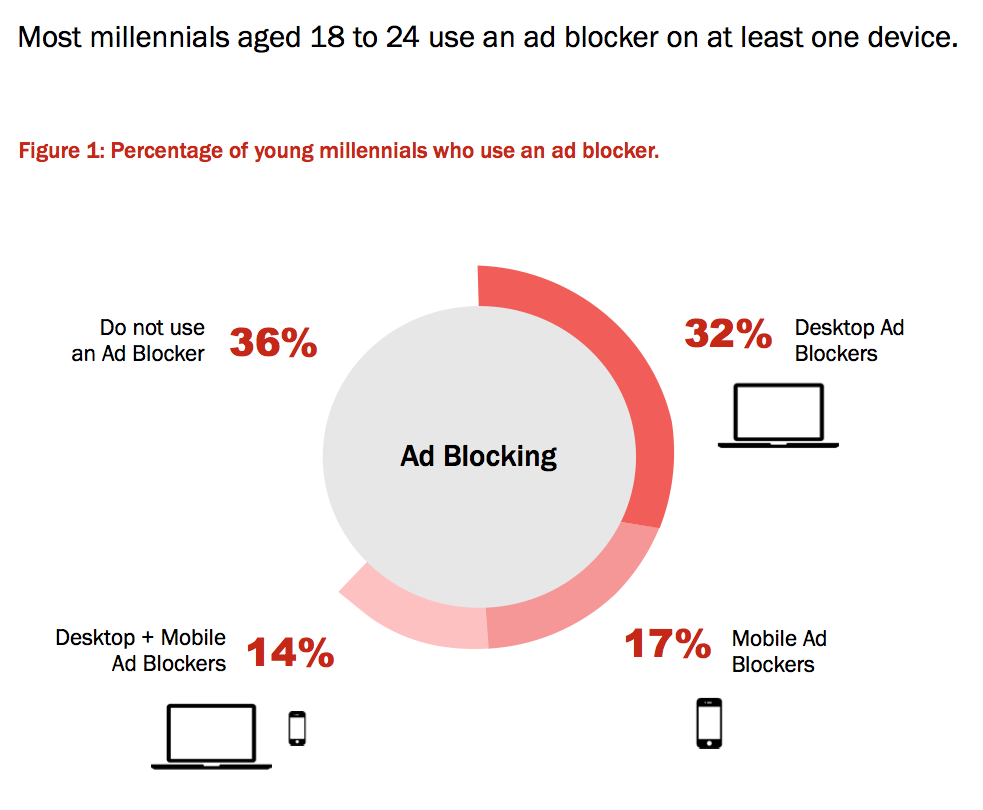

Anatomy’s Millennials at the Gate report found that young millennials represent the bloodiest cutting edge of ad block adoption. In fact, two out of three young millennials use an ad blocker. Why do they do it? 64% say it’s to avoid intrusive video ads. They also want to speed up their browsing and increase their privacy. In a nutshell, they do it to improve their viewing experience.

Gabriella Mirabelli, Anatomy’s CEO, notes, “This isn’t particularly surprising because the mantra for those looking to monetize content is create a premium user experience, or lose viewers. While much of this is common knowledge, it’s surprising that the most established video publishers aren’t doing anything about it.” An ad block wall is a website feature that detects ad blocker software and prevents a user from accessing site content until the ad block software is disabled. Of the 17 broadcast networks Anatomy surveyed, only one (CBS) employed an ad block wall. This failure by content owners means lost revenue, plain and simple.
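For readers who want to picture the mechanism, the ad block wall described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how CBS or any other network implements it: the `PageRequest` type, the `bait_ad_loaded` signal and the `render` function are all hypothetical names, and real sites typically run the detection in the browser.

```python
from dataclasses import dataclass


@dataclass
class PageRequest:
    # Hypothetical signal: True if a harmless "bait" ad resource loaded
    # in the viewer's browser. Ad blockers usually stop it from loading.
    bait_ad_loaded: bool


WALL_MESSAGE = "Please disable your ad blocker to keep watching."


def render(request: PageRequest, content: str) -> str:
    """Return the content, or the wall message if a blocker seems active."""
    if not request.bait_ad_loaded:
        # The bait never loaded, so assume an ad blocker and withhold content.
        return WALL_MESSAGE
    return content
```

Under these assumptions, a viewer with a blocker running gets the message instead of the video until the blocker is switched off.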

After the Olympics, some articles suggested that NBC’s online viewership may have cut into their linear ad revenue. Other articles laid the blame squarely at the door of millennials who, they complained, didn’t show up. Well, maybe they did and maybe they didn’t. But of the young millennials who did show up, we know that two-thirds had their ad blockers on, so the ad content was stripped out and the potential revenue never obtained.
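To make the arithmetic concrete, here is a rough back-of-the-envelope sketch. The two-thirds blocker share comes from the report; the viewer count and ads-per-viewer figures are made-up inputs for illustration only, and the function name is my own.

```python
def unserved_impressions(viewers: int, blocker_share: float, ads_per_viewer: int) -> int:
    """Estimate ad impressions that never render because of ad blockers."""
    return round(viewers * blocker_share * ads_per_viewer)


# Hypothetical inputs: 3,000 young millennial viewers, 4 ads each,
# with the report's two-thirds ad blocker share.
lost = unserved_impressions(viewers=3000, blocker_share=2 / 3, ads_per_viewer=4)
print(lost)  # 8000 of a possible 12,000 impressions
```

Whatever the real audience numbers are, the proportion holds: two of every three potential impressions in this cohort go unserved.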

Of course, NBC is not alone. Other than CBS, none of the networks tested had an ad block wall in place. And, it’s worth noting, CBS affiliates didn’t benefit from their network’s best practice, and there’s really no excuse for that. It might be that the networks are aware that if they put in an ad block wall, they will need to simultaneously monitor and manage the viewer’s streaming ad experience. Nothing will be more damaging to their brand or detrimental to the user experience than force-feeding viewers ads that are irrelevant and repetitive, and yet I have been complaining about this exact thing for years.

With two NFL games under Twitter’s belt now, I’m reading far too many headlines hyping what it means for Twitter to be in the live streaming business. Phrases like “game changing,” “milestone,” and “make-or-break moment” have all been used to describe Twitter’s NFL stream. Many commenting about the stream act as if live video is a new kind of technology breakthrough, with some even suggesting that “soon all NFL games will be broadcast this way.” While many want to talk about the quality of the stream, no one is talking about the user interface experience or the fact that Twitter can’t cover its licensing costs via any advertising model. What Twitter is doing with the NFL games is interesting, but it lacks engagement and is a loss leader for the company. There is nothing “game changing” about it.
