Reviewing Fastly’s New Approach To Load Balancing In The Cloud

Load balancing in the cloud is nothing new. Akamai, Neustar, Dyn, and AWS have been offering DNS-based cloud load balancing for a long time. This cloud-based approach has many benefits over more traditional appliance-based solutions, but it still has a number of shortcomings. Fastly’s recently released load balancer product takes an interesting new approach, which the company says addresses many of the original challenges.

But before delving into the merits of cloud-based load balancing, let’s take a quick look at the more traditional approach. The global and local load balancing market has long been dominated by appliance vendors like F5, Citrix, A10, and others. While they address key technology requirements, appliances have several weaknesses. First, they are costly to maintain. You need specialized IT staff to configure and manage them, not to mention the extra space and power they take up in your datacenter. Then there are the high support costs, sometimes as much as 20-25% of the total yearly cost of the hardware. Appliance-based load balancers are also notoriously difficult to scale. You can’t just “turn on another appliance” in response to flash traffic or a sudden surge in the popularity of your website. Finally, these solutions don’t fit into the growing cloud model, which requires you to be able to load balance within and between Amazon’s AWS, Google Cloud, or Microsoft Azure.

Cloud load balancers address the shortcomings of appliance-based solutions. However, the fact that they are built on top of DNS creates some new challenges. Let’s consider an example of a user who wants to connect to www.example.com. A DNS query is generated, and the DNS-based load balancing solution decides which region or location to send the user to based on a few variables. The browser or end-user system then caches that answer, typically for a minute or more. There are two key problems with this approach: the DNS time-to-live (TTL) caching, and the small number of variables the load balancer can use to make the optimal decision.

The first major flaw with this approach is that DNS-based load balancing depends on a mechanism that was designed to improve the performance of DNS itself. The answer returned for a DNS query can be cached for a time period specified by the server. This is called the Time to Live, or TTL, and the lowest value most sites use is usually between 30 and 60 seconds. However, most browsers have implemented their own caching layer that can override the TTL specified by the server. In fact, some browsers cache for 5-10 minutes, which is an eternity when a region or data center fails and you need to route end users to a different location. Granted, modern browsers have improved how they honor the TTL, but there are a ton of older browsers and various libraries that still hold on to cached DNS responses for 10+ minutes.
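
To make the caching problem concrete, here is a minimal simulation sketch in Python. It is not any vendor’s implementation: the `CachingClient` class, the `seconds_until_failover` helper, the IP addresses (taken from the documentation ranges), and the timing values are all assumptions chosen for illustration. It models a client that keeps a DNS answer for whichever is longer, the server’s TTL or its own minimum cache time, and measures how long that client keeps using a failed primary.

```python
from dataclasses import dataclass

# Hypothetical addresses from the reserved documentation ranges.
PRIMARY = "192.0.2.10"
BACKUP = "198.51.100.10"


@dataclass
class CachedAnswer:
    ip: str
    expires_at: float  # seconds on the simulated clock


class CachingClient:
    """Caches a DNS answer for max(server TTL, the client's own minimum)."""

    def __init__(self, resolve, min_cache_seconds=0.0):
        self.resolve = resolve
        self.min_cache_seconds = min_cache_seconds
        self.cache = {}

    def lookup(self, hostname, now):
        cached = self.cache.get(hostname)
        if cached is not None and now < cached.expires_at:
            return cached.ip  # still cached: the DNS load balancer is never asked
        ip, ttl = self.resolve(hostname)
        lifetime = max(ttl, self.min_cache_seconds)
        self.cache[hostname] = CachedAnswer(ip, now + lifetime)
        return ip


def seconds_until_failover(client_min_cache, ttl=30.0, failure_at=5.0, step=1.0):
    """Return how long after the failure the client first sees the backup IP."""
    state = {"primary_up": True}

    def resolve(hostname):
        # The DNS load balancer reacts instantly; only client caching adds delay.
        return (PRIMARY if state["primary_up"] else BACKUP), ttl

    client = CachingClient(resolve, client_min_cache)
    now = 0.0
    while True:
        if now >= failure_at:
            state["primary_up"] = False
        if client.lookup("www.example.com", now) == BACKUP:
            return now - failure_at
        now += step
```

Under these assumptions, a client that honors a 30-second TTL (`seconds_until_failover(0.0)`) keeps hitting the dead primary for 25 seconds after the failure, while one that pins answers for 10 minutes (`seconds_until_failover(600.0)`) does so for 595 seconds, even though the DNS load balancer itself switched over immediately.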

The second major flaw with DNS-based load balancing solutions is that the provider can only make a decision based on the IP address of the querying recursive DNS server or, less frequently (if the provider supports it), the end-user IP. In the most common case, the DNS-based solution receives a query for www.example.com and looks at the IP address of the querying system, which is generally just the end user’s DNS resolver and is often not even in the same geography as the user. The DNS-based load balancer has to make decisions based solely on this input. It doesn’t know anything about the specific request itself, e.g. the path requested, the type of content, whether the user is logged in, or particular cookie or header values. It only sees the querying IP address and the hostname, which severely limits its ability to make the best possible decision.
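
The limited input can be sketched in a few lines of Python. This is a hypothetical illustration, not how any particular DNS provider works: the `dns_pick_region` function, the network-to-region table, and the region names are invented for the example. The point is the function signature, which contains everything a DNS-based decision gets to look at.

```python
import ipaddress

# Hypothetical mapping from resolver networks to serving regions.
REGION_BY_RESOLVER_NETWORK = {
    ipaddress.ip_network("192.0.2.0/24"): "us-east",
    ipaddress.ip_network("198.51.100.0/24"): "eu-west",
}
DEFAULT_REGION = "us-east"


def dns_pick_region(hostname: str, resolver_ip: str) -> str:
    """Choose a region using only what a DNS query exposes.

    There is no path, cookie, header, or login state here: the hostname and
    the querying resolver's IP address are the only inputs available.
    """
    addr = ipaddress.ip_address(resolver_ip)
    for network, region in REGION_BY_RESOLVER_NETWORK.items():
        if addr in network:
            return region
    return DEFAULT_REGION
```

Two users on different continents who share the same public resolver would both produce the same `resolver_ip`, so `dns_pick_region("www.example.com", "198.51.100.53")` returns `"eu-west"` for both of them, regardless of where they actually are or what they are requesting.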

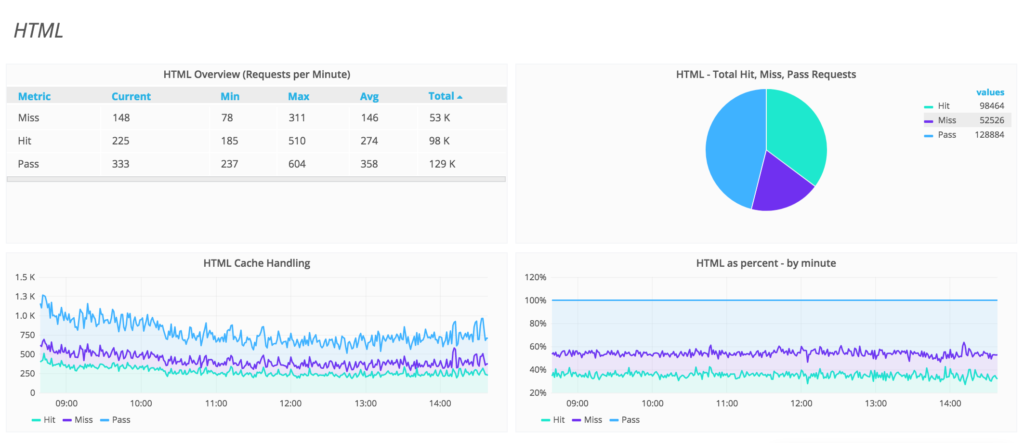

Fastly says its new application-aware load balancer is built in such a way that it avoids these problems. It’s basically a SaaS offering built on top of the company’s 10+ Tbps platform, which already provides CDN, DDoS protection, and web application firewall (WAF) services. Fastly’s load balancer makes all of its load balancing decisions at the HTTP/HTTPS layer, so it can make application-specific decisions on every request, overcoming the two major flaws of the DNS-based solutions. Fastly also provides granular control, including the ability to make different load balancing decisions and ratios based on cookie values, headers, whether a user is logged in (and whether they are a premium customer), what country they come from, etc. Decisions are also made on every request to the customer’s site or API, not just when the DNS cache expires. This allows for sub-second failover to a backup site if the primary is unavailable.
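
As a rough illustration of what deciding at the HTTP layer makes possible, here is a minimal Python sketch. It is not Fastly’s API or configuration language; the `Request` and `Backend` types, the pool names, the `tier` cookie, and the routing rules are all hypothetical. It only shows that a per-request decision can read the path, cookies, and country, and that a health check consulted on every request lets the very next request fail over.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    country: str
    cookies: dict = field(default_factory=dict)
    headers: dict = field(default_factory=dict)


@dataclass
class Backend:
    name: str
    healthy: bool = True


# Hypothetical pools; each list is in order of preference.
POOLS = {
    "api": [Backend("api-primary"), Backend("api-backup")],
    "premium": [Backend("premium-primary"), Backend("premium-backup")],
    "eu": [Backend("eu-primary"), Backend("eu-backup")],
    "default": [Backend("default-primary"), Backend("default-backup")],
}


def pick_pool(request: Request) -> str:
    """Choose a pool from request details a DNS-based balancer never sees."""
    if request.path.startswith("/api/"):
        return "api"
    if request.cookies.get("tier") == "premium":
        return "premium"
    if request.country in {"DE", "FR"}:
        return "eu"
    return "default"


def pick_backend(request: Request, pools: dict) -> Backend:
    """Return the first healthy backend in the chosen pool.

    This runs on every request, so a backend marked unhealthy is skipped
    immediately rather than after a cached DNS answer expires.
    """
    for backend in pools[pick_pool(request)]:
        if backend.healthy:
            return backend
    raise RuntimeError("no healthy backend available")
```

For example, a request with `cookies={"tier": "premium"}` is sent to `premium-primary`; if that backend’s `healthy` flag is set to `False`, the next call to `pick_backend` for the same request returns `premium-backup`. How the health flag gets updated is outside the scope of this sketch.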

The other main difference is that Fastly’s Load Balancer, like the rest of the company’s services, is developed on its single edge cloud platform, allowing customers to take advantage of all the benefits of this platform. For example, they can create a proactive feedback loop that pairs real-time streaming logs, to identify issues faster, with instant configuration changes to address them. You can see more about what Fastly is doing with load balancing by checking out their recent video presentation from the CDN Summit last month.

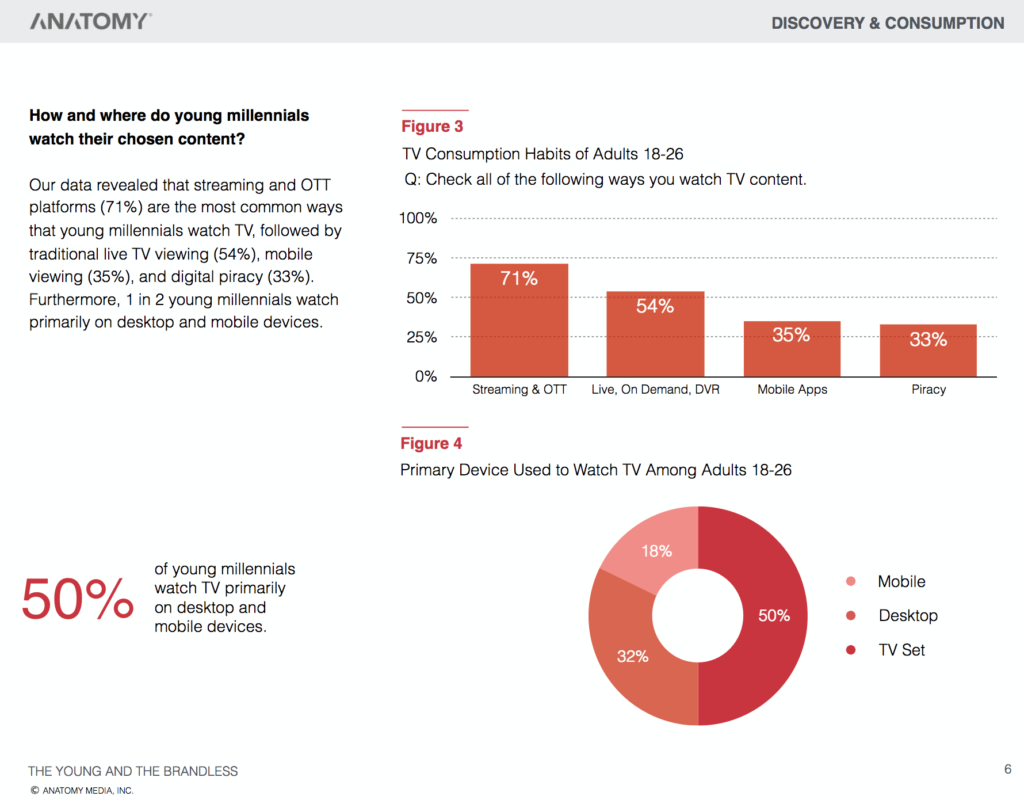

The battle for bandwidth is nothing new. As CE manufacturers push the bounds on display technologies, and with 360 and VR production companies demonstrating ever more creative content, the capacity of networks will be taxed to levels much greater than we see today. For this reason, the Apple announcement at their 2017 Worldwide Developers Conference that they are supporting HEVC in High Sierra (macOS) and iOS 11 is going to be a big deal for the streaming media industry. There is little doubt that we are going to need that big bandwidth reduction that HEVC can deliver.
