Why Video Engineering Teams Are Taking A Video QoE-First Approach To Playback Testing

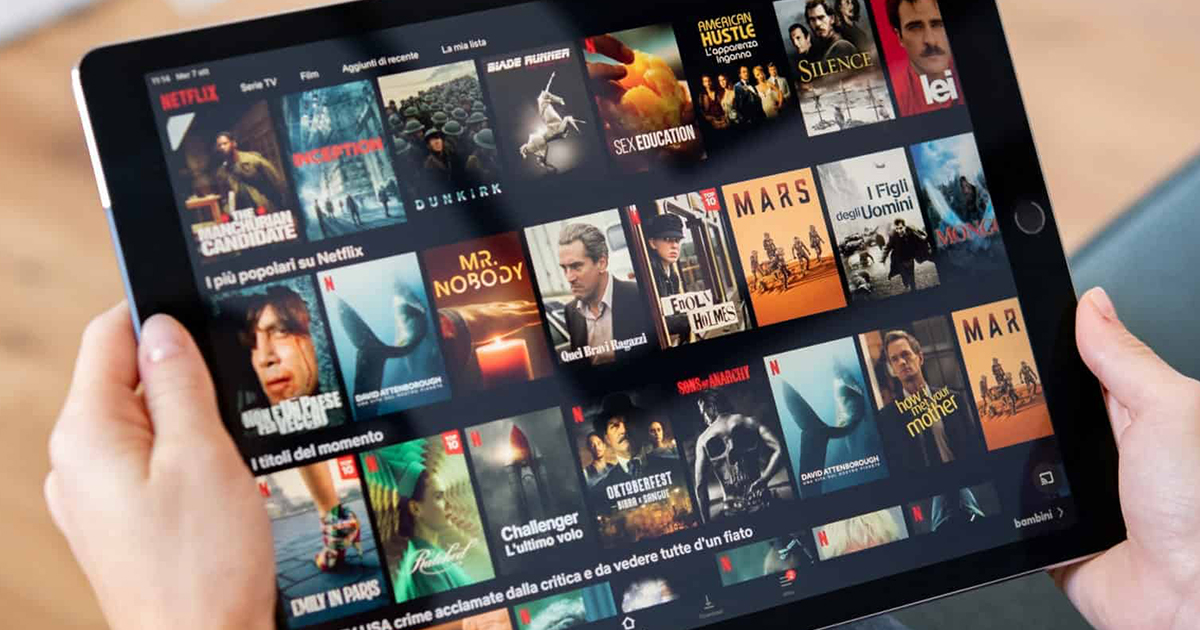

Recently, I wrote about how device fragmentation and testing have become two of the most significant issues facing streaming services, specifically in ensuring audiences have access to high-quality playback that provides the best viewer experience on every device. Frustrated users are more than happy to give feedback on poor experiences through app ratings, social media, and other forums, influencing how potential customers view streaming services. This matters because quality of experience has become one of the deciding factors in user retention, and failing to provide it can seriously affect growth and adoption.

At the root of ensuring quality is the Q/A testing done by the engineering teams, which generally happens through manual and automated methods. As I mentioned in my other post, both come at a high cost in terms of time and budget and require a high level of streaming workflow knowledge to identify and create the tests for every relevant use case. In this post, I will briefly touch on some of the current options on the market, their limitations, and how providers are jumping into the testing mix.

I often get asked what tools streaming Q/A teams are currently using. Many Q/A teams start with a small list of manual tests tracked in a spreadsheet or internal wiki, but this approach doesn’t scale and leaves too much room for error as the number of test cases grows. Given the complexity of playback, that is just not an option for most, if not all, streaming providers. Automated testing doesn’t share this scaling problem, as multiple open-source frameworks and vendors offer services covering browsers and different devices.

When it comes to free, open-source testing frameworks, some of the names I hear most often are Selenium, Cypress, and Playwright. On the SaaS platform side, they are BrowserStack, LambdaTest, and AWS Device Farm. Some of these platforms are excellent at providing or enabling streaming services to build testing structures for website and application performance, but they miss the mark when it comes to video. If you are focused on streaming media, these options aren’t dedicated to testing streaming playback and won’t always cover every device you need to support. This is important because even with access to automated testing frameworks, development teams will still need to identify and take the time to build most of the use cases to fit their needs.

Additionally, even though these options are good at what they do, they either come bare-bones (no pre-set test cases) or offer general use cases for multiple industries that can be implemented across testing structures. The limitations of general-purpose frameworks become apparent when engineering teams need to get more granular and focus on performance, functionality, and playback quality. The heavy lifting will still be up to them.
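To give a sense of the kind of video-specific logic a team ends up writing itself on top of a general-purpose framework, here is a minimal Python sketch of one check: finding stalls in a playback session. It assumes the team has already collected `(wall_clock_seconds, playhead_seconds)` samples, for example by polling a video element's `currentTime` from whichever automation tool they use. The names `Stall` and `find_stalls` and the threshold values are hypothetical, chosen only for illustration, and are not taken from any of the tools mentioned here.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Stall:
    start: float     # wall-clock second at which the playhead stopped advancing
    duration: float  # how long the playhead stayed frozen, in seconds


def find_stalls(
    samples: Sequence[Tuple[float, float]],
    min_stall: float = 1.0,  # hypothetical threshold: ignore freezes shorter than this
    epsilon: float = 0.05,   # hypothetical tolerance for playhead jitter
) -> List[Stall]:
    """Return the periods where the playhead did not advance between samples."""
    stalls: List[Stall] = []
    frozen_since = None
    for (t_prev, pos_prev), (_t_cur, pos_cur) in zip(samples, samples[1:]):
        advanced = (pos_cur - pos_prev) > epsilon
        if not advanced and frozen_since is None:
            frozen_since = t_prev  # playhead stopped moving at the earlier sample
        elif advanced and frozen_since is not None:
            if t_prev - frozen_since >= min_stall:
                stalls.append(Stall(frozen_since, t_prev - frozen_since))
            frozen_since = None
    # A freeze that is still ongoing when sampling ends also counts.
    if frozen_since is not None and samples[-1][0] - frozen_since >= min_stall:
        stalls.append(Stall(frozen_since, samples[-1][0] - frozen_since))
    return stalls


if __name__ == "__main__":
    session = [(0, 0.0), (1, 1.0), (2, 2.0), (3, 2.0), (4, 2.0), (5, 2.0), (6, 3.0)]
    for stall in find_stalls(session):
        print(f"stall at {stall.start}s lasting {stall.duration}s")
```

Even this small check leaves open questions a general-purpose framework will not answer for you, such as how to tell a stall from a deliberate pause, or which thresholds are acceptable on which devices.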

Some vendors focusing on OTT and streaming are Suitest, Eurofins, Applause, and Bitmovin. The first three do it well, but with certain limitations: restricted automation control, no self-service option, the need to buy dedicated test devices, or a focus on guaranteeing quality of experience within applications. Bitmovin is the latest to join this group, known in the industry for their encoding, player, and analytics capabilities and their streaming workflow expertise. Bitmovin added playback testing to the mix back in April by making their extensive internal playback quality and performance test automation publicly available, creating a unique client-facing solution.

Just before the NAB Show in April, Bitmovin released their latest Player feature, Stream Lab, which is currently in beta and open for anyone to test. I got the chance to see it firsthand at the show and learn more about this ambitious attempt to address the issue of device fragmentation. With their expertise in streaming and investment in building their own internal automated testing solution, stepping into playback testing made sense.

As their solutions are an essential part of a range of end-to-end video workflows, they have a full view of how the different pieces interact with each other and have developed over 1,000 use cases they currently test on their Player. They have also built up their testing center with multiple generations of real devices, which you can see in my LinkedIn post from when Stream Lab was first announced. That is where Stream Lab has its advantage, as it is the first automated playback testing solution that provides access to pre-generated use cases built for the streaming community to test on major browsers and physical devices such as Samsung Tizen and LG Smart TVs. This makes it possible for teams to ensure high-quality playback for streams, with no Q/A or development experience necessary, giving you confidence and peace of mind around version updates or even potentially supporting new devices.

Even though Stream Lab is innovative in its mission as a solution for device fragmentation, it is currently available only in open beta as Bitmovin looks to add more functionality and use cases. The company started developing their own internal automated infrastructure about five years ago, and they’ve been adding use cases and functionality from workflows they’ve implemented ever since. Stream Lab still has a good amount to work on and add, but it stands to be a significant plus for development teams in the streaming sector and will be an essential piece of Bitmovin’s playback services.

How the industry tackles device fragmentation will be interesting to continue to analyze. It’s definitely something to pay attention to, as device makers show no signs of slowing down or of creating a standard. Because of this, video engineering teams will struggle to cover every use case viewers might experience during playback, especially as new AVOD services from Netflix and Disney come to the market.