PacketZoom Looking To Solve TCP Slow Start By Boosting Mobile App Performance

TCP is a protocol designed for stable networks, and mobile networks are all too often anything but stable: their connections are unreliable and frequently bottlenecked, which clashes with TCP mechanisms such as slow start. But while TCP can look unbeatable from some angles, PacketZoom, an in-app technology that boosts mobile app performance, says outperforming TCP is actually not as difficult as it looks. The problem is certainly complex, with many moving pieces involved, especially in the last mile. But if you break the problem down into digestible chunks and apply observations from the real world, improvement becomes achievable.

The internet is made up of links of different capacities, connected by routers. The goal is to achieve the maximum possible throughput through this system, but because the links all have different capacities, we don’t know ahead of time which one will be the bandwidth bottleneck. PacketZoom uses a freeway analogy to describe the system. Imagine we have to move trucks from a start point to an end point. At the start, the freeway has six lanes, but at the end it has only two. When the trucks arrive at the end, an “acknowledgement” is sent back. Each acknowledgement improves the sender’s knowledge of road conditions, allowing trucks to be sent more efficiently.
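
To make the analogy concrete, here is a minimal Python sketch of the two quantities the sender is effectively trying to discover: the bottleneck rate, which is simply the slowest link on the path (the “two-lane” stretch), and the bandwidth-delay product (BDP), the number of segments of maximum segment size (MSS) that must be on the road at once to keep that bottleneck busy. The link speeds, the RTT, the 1460-byte MSS, and the function names are illustrative assumptions, not PacketZoom data or code.

```python
# Illustrative sketch only: link speeds, RTT and MSS are assumed values.

def bottleneck_mbps(link_capacities_mbps: list[float]) -> float:
    """The end-to-end rate can never exceed the slowest link on the path."""
    if not link_capacities_mbps:
        raise ValueError("path must contain at least one link")
    return min(link_capacities_mbps)


def bdp_segments(bottleneck: float, rtt_ms: float, mss_bytes: int = 1460) -> int:
    """Bandwidth-delay product in MSS-sized segments: how many 'trucks'
    must be in flight at once to keep the bottleneck fully busy."""
    bits_in_flight = bottleneck * 1_000_000 * (rtt_ms / 1000)
    return max(1, round(bits_in_flight / 8 / mss_bytes))


# Hypothetical path: fast links near the server, a slower last mile.
path = [1000.0, 100.0, 12.0]
print(bottleneck_mbps(path))           # 12.0
print(bdp_segments(12.0, rtt_ms=100))  # 103
```

The sender knows neither number in advance; acknowledgements are what let it infer them.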

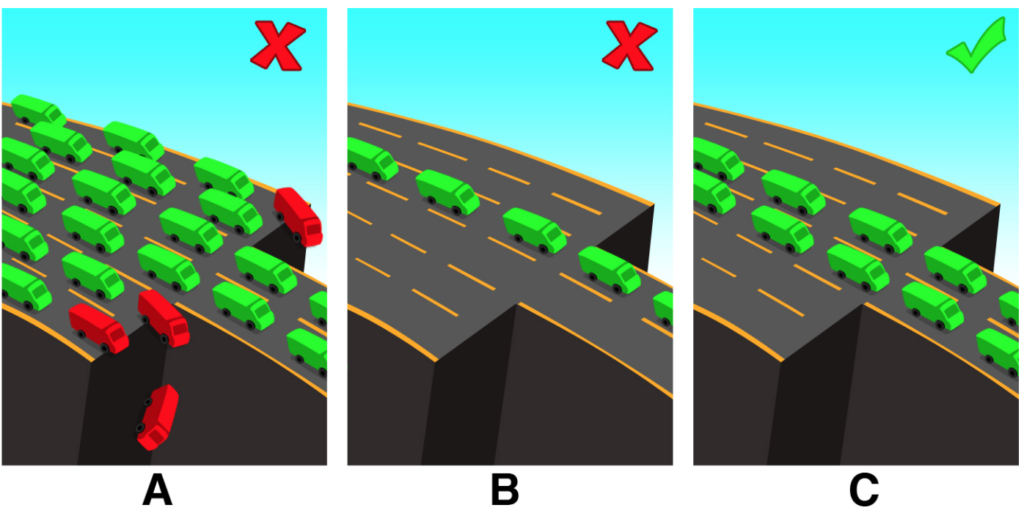

The goal is to get the maximum number of trucks to the destination as fast as possible. You could, of course, send six lanes’ worth of trucks at the start, but if the four extra lanes suddenly end, the extra trucks must be taken off the road and resent. Given the unknown road conditions at the start of the journey, TCP Shipping, Inc. sensibly starts out with just one lane’s worth of trucks. This is the “slow start” phase of TCP, and it’s one of the protocol’s unsolved problems on mobile. TCP starts with the same amount of data on every kind of channel, so it often ends up with an initial sending rate far below the available bandwidth; conversely, if it started with too much data, the rate would exceed the available bandwidth and force resending.
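
The trade-off in the first round trip can be sketched under a deliberately simplified model that ignores router buffering: anything sent beyond the bottleneck’s capacity is dropped and must be resent, and any capacity left unused is wasted. The `first_round` function and its units (segments, or “lanes”) are hypothetical, chosen to mirror the freeway analogy.

```python
# Simplified no-buffering model of the first round trip (an assumption).

def first_round(initial_window: int, capacity: int) -> dict[str, int]:
    """Outcome of the first round trip.

    initial_window: segments ("trucks") sent before any acknowledgement arrives
    capacity:       segments the bottleneck ("two lanes") carries per round trip
    """
    if initial_window < 1 or capacity < 1:
        raise ValueError("window and capacity must be positive")
    delivered = min(initial_window, capacity)
    return {
        "delivered": delivered,
        "resend": initial_window - delivered,  # overshoot: dropped, sent again later
        "idle": capacity - delivered,          # undershoot: bandwidth left unused
    }


print(first_round(6, 2))  # {'delivered': 2, 'resend': 4, 'idle': 0}
print(first_round(1, 2))  # {'delivered': 1, 'resend': 0, 'idle': 1}
print(first_round(2, 2))  # {'delivered': 2, 'resend': 0, 'idle': 0}
```

Starting with one lane avoids resends but leaves half the road empty; only a starting point that matches the bottleneck avoids both costs.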

However, PacketZoom says it is possible to be smarter by choosing the initial estimate based on channel properties discernible from the mobile context. In other words, we could start off with the ideal two lanes of trucks, as shown in frame C of the freeway illustration.

Of course, it’s impossible to know network conditions perfectly ahead of time, but prior knowledge of those conditions makes a much better estimate possible. If the estimates are close to correct, the slow start penalty can be reduced enormously. PacketZoom is not claiming that TCP’s starting point has never improved. Traditionally, TCP’s initial congestion window was set to about 3 MSS (maximum segment size: the largest payload a single TCP segment carries, i.e. the path MTU minus the IP and TCP headers). As networks improved, this was raised to 10 MSS, and Google’s Quick UDP Internet Connections (QUIC) protocol, used in the Chrome browser, went further with an initial window of 32 MSS.
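
Why the starting value matters can be shown with a minimal sketch of idealized slow start, in which the window doubles once per round trip and nothing is lost. The path (a BDP of 100 segments at a 100 ms RTT) is hypothetical; the initial windows are the 3, 10 and 32 MSS values mentioned above, and `ramp_up_ms` is an illustrative name.

```python
# Idealized slow start: window doubles every RTT, no loss (assumptions).

def ramp_up_ms(initial_window: int, bdp_segments: int, rtt_ms: float) -> float:
    """Time spent ramping up before the window first covers the BDP."""
    if initial_window < 1:
        raise ValueError("initial window must be at least 1 segment")
    rtts, window = 0, initial_window
    while window < bdp_segments:
        window *= 2  # one extra segment per ACK doubles the window each RTT
        rtts += 1
    return rtts * rtt_ms


# Hypothetical path: BDP of 100 segments, 100 ms round-trip time.
for iw in (3, 10, 32):
    print(f"IW={iw} MSS -> {ramp_up_ms(iw, 100, 100.0):.0f} ms before full speed")
# IW=3 MSS -> 600 ms before full speed
# IW=10 MSS -> 400 ms before full speed
# IW=32 MSS -> 200 ms before full speed
```

Each doubling costs a full round trip, so on high-latency mobile links an initial window that is too small is paid for in hundreds of milliseconds.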

But mobile has largely been passed by, because browser traffic is the minority on mobile. A larger fixed initial window, like QUIC’s, is not a solution either, given the vast range of conditions between a 2G network in India and an ultra-fast Wi-Fi connection at Google’s Mountain View campus. Today, a very common case is a mobile device accessing content over a wireless network where the bandwidth bottleneck is the last mobile mile. That path has very specific characteristics based on the network type, carrier and location. For instance, 3G on T-Mobile in New York behaves differently from LTE on AT&T in San Francisco.
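
One way to act on this is to key bandwidth estimates by (network type, carrier, location), refresh them from completed transfers, and convert each estimate into an initial window. The sketch below is hypothetical throughout: the class and method names, the use of an exponentially weighted moving average (EWMA) for updating, the 3 to 100 MSS clamp, and the 10 MSS fallback are assumptions, not PacketZoom’s published method.

```python
from dataclasses import dataclass

MSS_BYTES = 1460   # assumed MSS for a 1500-byte MTU
DEFAULT_IW = 10    # assumed fallback (the standard 10 MSS) for unseen contexts

Context = tuple[str, str, str]  # hypothetical key: (network type, carrier, location)


@dataclass
class ContextEstimate:
    mbps: float     # smoothed throughput for this context
    rtt_ms: float   # smoothed round-trip time for this context


class InitialWindowEstimator:
    """Hypothetical per-context estimator using an EWMA (an assumed technique)."""

    def __init__(self, alpha: float = 0.2, floor: int = 3, ceiling: int = 100) -> None:
        self.alpha = alpha      # weight given to the newest observation
        self.floor = floor      # assumed lower bound on the initial window
        self.ceiling = ceiling  # assumed safety cap on the initial window
        self._table: dict[Context, ContextEstimate] = {}

    def observe(self, ctx: Context, mbps: float, rtt_ms: float) -> None:
        """Fold one completed transfer's throughput and RTT into the estimate."""
        est = self._table.get(ctx)
        if est is None:
            self._table[ctx] = ContextEstimate(mbps, rtt_ms)
            return
        a = self.alpha
        est.mbps = a * mbps + (1 - a) * est.mbps
        est.rtt_ms = a * rtt_ms + (1 - a) * est.rtt_ms

    def initial_window(self, ctx: Context) -> int:
        """BDP in MSS-sized segments, clamped; the fallback if no data exists."""
        est = self._table.get(ctx)
        if est is None:
            return DEFAULT_IW
        bdp_bytes = est.mbps * 1_000_000 / 8 * est.rtt_ms / 1000
        return max(self.floor, min(self.ceiling, round(bdp_bytes / MSS_BYTES)))


# Usage with made-up measurements:
estimator = InitialWindowEstimator()
ny_3g = ("3G", "T-Mobile", "New York")
estimator.observe(ny_3g, mbps=2.0, rtt_ms=150.0)
print(estimator.initial_window(ny_3g))                             # 26
print(estimator.initial_window(("LTE", "AT&T", "San Francisco")))  # 10 (no data yet)
```

The EWMA lets recent measurements dominate while smoothing out single noisy transfers; a production system would likely also need to age out stale contexts.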

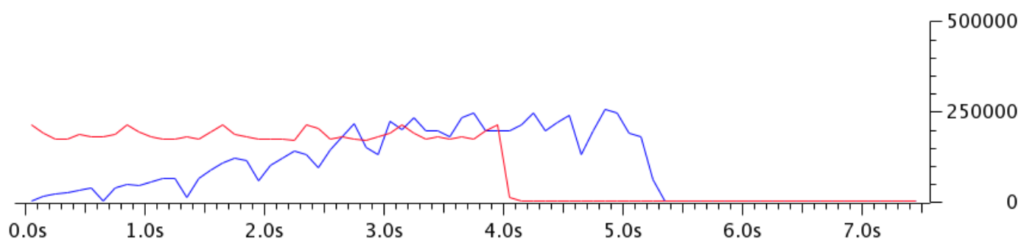

From the real-world data PacketZoom has collected, the company has observed ideal initial window values ranging from 3 MSS to over 100 MSS under different network conditions. Historical knowledge of these conditions is what allows it to replace a slow start with a head start. Crunching a giant stream of performance data from networks worldwide to keep bandwidth estimates constantly updated is not a trivial problem. But it’s not an intractable one either, given the computing power available today. In a typical scenario, if a connection starts with a good estimate and completes a couple of round trips across the network, it can very quickly converge on an accurate estimate of the available bandwidth. Consider the following Wireshark graph, which shows how quickly a 4MB file was transferred with no slow start (red line) versus TCP slow start (blue line).

TCP started with a very low value and took 3 seconds to fully utilize the bandwidth. In the controlled experiment shown by the red line, full bandwidth was in use nearly from the start. The blue line also shows the fairly aggressive backoff that’s typical of TCP. In cricket, they often say that you need a good start to pace your innings well, and that you win matches by striking the loose deliveries. PacketZoom says that in its case, initial knowledge of bottlenecks provides the good start. And beyond the start, there’s even more it can do to “pace” the traffic and look for “loose deliveries.” The payoff would be a truly big win: a huge increase in the efficiency and speed of mobile data transfer.
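
A rough model of that comparison, not a reproduction of the graph: count the round trips needed to deliver a 4MB file when the window starts small and doubles each RTT, versus when it starts at the bottleneck’s capacity. The BDP of 100 segments, the 1460-byte MSS, reading 4MB as 4 × 1024 × 1024 bytes, and the `transfer_rtts` name are assumptions; the model ignores loss, backoff and delayed ACKs, so it says nothing about the backoff visible in the blue line.

```python
import math

# Simplified model: no loss, no backoff, no delayed ACKs (assumptions).

def transfer_rtts(file_bytes: int, initial_window: int, bdp_segments: int,
                  mss_bytes: int = 1460) -> int:
    """Round trips to deliver a file when the window starts at initial_window,
    doubles every RTT (slow start) and is capped at the bottleneck's BDP."""
    remaining = math.ceil(file_bytes / mss_bytes)   # segments still to send
    window = min(initial_window, bdp_segments)      # excess beyond the BDP is not modeled
    rtts = 0
    while remaining > 0:
        remaining -= window
        rtts += 1
        window = min(window * 2, bdp_segments)
    return rtts


FILE_BYTES = 4 * 1024 * 1024
for iw in (3, 10, 100):
    print(f"IW={iw}: {transfer_rtts(FILE_BYTES, iw, bdp_segments=100)} round trips")
# IW=3: 33 round trips
# IW=10: 32 round trips
# IW=100: 29 round trips
```

Under these assumptions the saving is a handful of round trips; it grows when the BDP is large relative to the initial window, and it matters most for small transfers, which can finish before slow start ever reaches full speed.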

Mobile app performance is a fascinating topic because now, more than ever, we’re consuming content through apps over mobile networks rather than through the browser. I’d be interested to hear how others think the bottlenecks over the mobile last mile can be solved.