How To Implement A QoS Video Strategy: Addressing The Challenges

While the term “quality” has been used in the online video industry for twenty years now, in most cases, the word isn’t defined with any real data and methodology behind it. All content owners are quick to say how good their OTT offering is, but most don’t have any real metrics to know how good or bad it truly is. Some of the big OTT services like Netflix and Amazon have built their own platforms and technology to measure QoS, but the typical OTT provider needs to use a third-party provider.

I’ve spent a lot of time over the past few months looking at solutions from Conviva, NPAW, Touchstream, Hola, Adobe, VMC, Interferex and others. It seems every quarter there is a new QoS vendor entering the market, and while choice is good for customers, more choices also mean more confusion about the best way to measure quality. I’ve talked to all the vendors and many content owners about QoE, and there are a lot of challenges when it comes to implementing a QoS video strategy. Here are some guidelines OTT providers can follow.

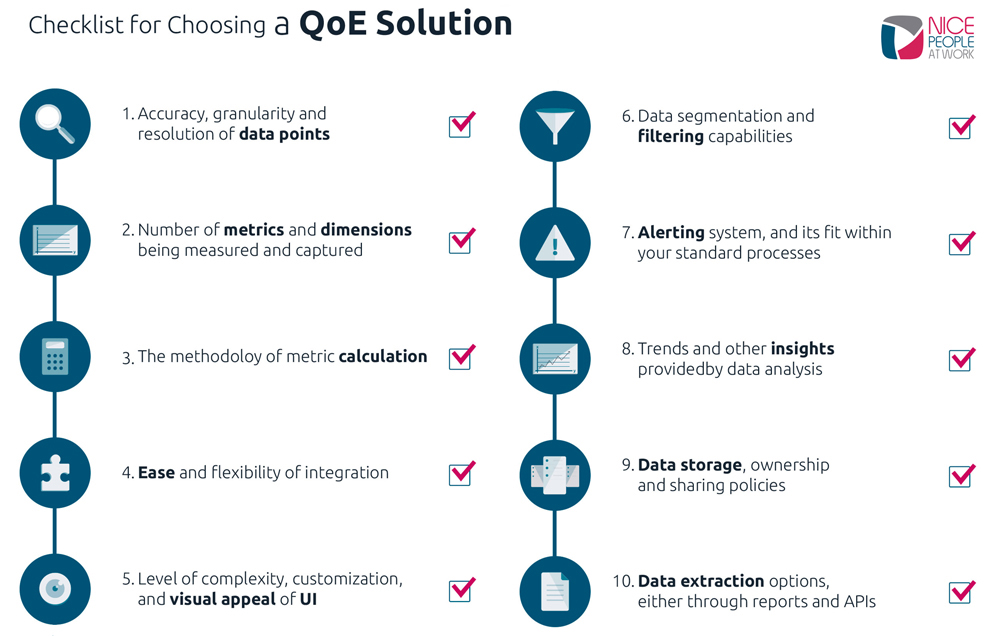

One of the major challenges in deploying QoS is the impact that the collection beacon itself has on the player and the user experience. Content owners can build these scripts themselves, but building them for every platform in their ecosystem, then developing dashboards, defining metrics and analyzing the data, takes significant time and resources. Most choose a third-party vendor that specializes in this technology, but choosing the right vendor can be another pain point. There are many things to consider when choosing a vendor, but with regard to implementation, content owners should look at the efficiency of the vendor’s proposed integration process (for example, whether it has standardized scripts for the platforms, devices and players you are using, and the average time it takes to integrate) and its ability to adapt to your development schedule. [Diane Strutner from Nice People At Work (NPAW) had a good checklist on choosing a QoE solution from one of her presentations, which I have included with permission below.]

Another thing to consider is the technology behind the beacon itself. The heavier the plug-in, the longer the player load time will be. There are two types of beacons: those that process the data on the client (player-side), which tend to be heavier, and those that push information back to a server to be processed, which tend to be lighter.

One of the biggest challenges in implementing QoS, if not the biggest, is that it forces content owners to accept a harsh reality: their services do not work as they should all the time. It can reveal that the CDN, DRM, ad server or player provider that the content owner is using is not doing its job correctly. So the next logical question is: what impact does this have? The answer is that you won’t know (you can’t know) until you have the data. You have to gather this data by properly implementing a QoS monitoring, alerting and analysis platform, and then apply the insights gathered from it to your online video strategy.

When it comes to collecting metrics, a few matter most in ensuring that broadcast-quality video looks good. The most important are buffer ratio (the amount of time spent buffering divided by playtime), join time (the time until the first frame of video is delivered), and bitrate (the number of bits per second that can be delivered over a network). Buffer ratio and join time have elements of the delivery process that can be controlled by the content owner, and others that cannot. For example, are you choosing a CDN that has POPs close to your customer base, has consistent and sufficient throughput to deliver the volume of streams being requested, and peers well with the ISPs your customer base is using? Other elements, like bitrate, are not something a content owner can control, but they should influence your delivery strategy, particularly when it comes to encoding.
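To make the two definitions above concrete, here is a minimal sketch of how buffer ratio and join time could be computed from a single playback session. The `Session` record and its field names are hypothetical, not any vendor’s actual beacon format, and the buffer ratio follows the definition used in this article (buffering time divided by playtime); vendors may define it differently.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One playback session as a hypothetical beacon might report it (seconds)."""
    play_requested_at: float  # timestamp when the viewer pressed play
    first_frame_at: float     # timestamp when the first video frame rendered
    play_time: float          # total time spent actually playing video
    buffer_time: float        # total time spent stalled after playback began


def join_time(session: Session) -> float:
    """Time from the play request to the first rendered frame."""
    return session.first_frame_at - session.play_requested_at


def buffer_ratio(session: Session) -> float:
    """Buffering time divided by playtime, per the definition in this article."""
    if session.play_time <= 0:
        # Assumption: a session that never played has no meaningful ratio.
        return 0.0
    return session.buffer_time / session.play_time


example = Session(play_requested_at=10.0, first_frame_at=12.5,
                  play_time=600.0, buffer_time=12.0)
print(f"join time: {join_time(example):.1f}s")
print(f"buffer ratio: {buffer_ratio(example):.1%}")
```

In practice these per-session values would be aggregated across many sessions and sliced by CDN, device or ISP before drawing any conclusions.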

For example, if you are encoding at HD bitrates but your user base streams at low bitrates, quality metrics like buffer ratio and join time will increase. One thing to remember is that you can’t look to just one metric to understand the experience of your user base. Looking at one metric alone can be misleading. These metrics are all interconnected, and you need the full scope of data to get the full picture of your QoS and the impact it has on your user experience.
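One way to check for the mismatch described above is to compare your encoding ladder against the throughput your viewers actually measure. The sketch below is illustrative only: the function name, the sample numbers and the 1.5x headroom factor are all assumptions, not an industry standard.

```python
def sustainable_share(ladder_kbps, throughputs_kbps, headroom=1.5):
    """For each rendition, return the fraction of sessions whose measured
    throughput is at least `headroom` times the rendition's bitrate.

    `headroom` is an assumed safety margin, not a standard value.
    """
    total = len(throughputs_kbps)
    shares = {}
    for bitrate in sorted(ladder_kbps):
        able = sum(1 for t in throughputs_kbps if t >= bitrate * headroom)
        shares[bitrate] = able / total if total else 0.0
    return shares


# Hypothetical data: an HD-only ladder against a mostly low-bandwidth audience.
ladder = [3000, 6000]                      # rendition bitrates in kbps
measured = [1000, 2000, 5000, 10000]       # per-session throughput in kbps
for bitrate, share in sustainable_share(ladder, measured).items():
    print(f"{bitrate} kbps: {share:.0%} of sessions can sustain it")
```

If only a small share of sessions can sustain even the lowest rendition, adding lower bitrates to the ladder is likely to do more for buffer ratio and join time than any change of CDN.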

Content owners routinely ask how they can ensure a great QoE when there are so many variables (e.g., user bandwidth, user compute environment, access network congestion). They also want to know, once the data is collected, what industry benchmarks they should compare it to. The important thing to remember is that such benchmarks can never be seen as anything more than a starting block. If everything you do is “wrong” (streaming from CDNs with POPs halfway across the world from your audience base, encoding in only a few bitrates, and other industry mistakes) and your customer base and engagement grow (and you earn more from ad serving and/or improve retention), then who cares? And let’s say you do everything “right” (streaming from CDNs with the best POP map, and encoding in a vast array of bitrates) and yet your customers leave (and the connected subscription and ad serving revenue drops), then who cares either?

When it comes to QoS metrics, the same logic applies. So what should content owners focus on? The metrics (or combination of them) that are impacting their user base the most. How do content owners identify what these are? They need data to start. For one it could be join time. For their competitor it could be buffer ratio. Do users care that one content owner paid more for its CDN, or has a lower buffer ratio than its competitor? Sadly, no. The truth about what matters to a content owner’s business (as it relates to which technologies to use, or which metrics are paramount) lies in its own numbers. And that truth may (will) change as your user base changes, viewing habits and consumption patterns change, and your consumer and vendor technologies evolve. Content owners must have a system that provides continual monitoring to detect these changes at both a high level and a granular level.
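As a rough illustration of what detecting these changes can mean, the sketch below compares a recent window of a metric against its historical baseline and flags a relative increase. The function name and the 20% tolerance are assumptions made for this example; a real monitoring platform would use something more robust.

```python
from statistics import mean


def metric_drifted(history, recent, tolerance=0.2):
    """Return True if the recent average exceeds the historical average
    by more than `tolerance` (relative). The tolerance is an assumed value."""
    if not history or not recent:
        return False  # not enough data to compare
    baseline = mean(history)
    if baseline == 0:
        return mean(recent) > 0
    return (mean(recent) - baseline) / baseline > tolerance


# Hypothetical daily buffer ratios: a stable baseline, then a jump.
baseline_days = [0.010, 0.011, 0.009, 0.010]
last_two_days = [0.019, 0.021]
print(metric_drifted(baseline_days, last_two_days))
```

Running the same check per CDN, device type or region, rather than only on the overall average, is what gives the granular view alongside the high-level one.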

There has already been widespread adoption of online video, but the contexts in which we use video, and the volume of video content that we’ll stream per capita, have a lot of runway. As Diane Strutner from Nice People At Work correctly pointed out, “The keys to the video kingdom go back to the two golden rules of following the money and doing what works. Content owners will need more data to know how, when, where and why their content is being streamed. These changes are incremental and a proper platform to analyze this data can detect these changes for you.” The technology used will need to evolve to improve consistency, and the cost structure associated with streaming video will need to continually adapt to fit greater economies of scale, which is what the industry as a whole is working towards.

And most importantly, the complexity currently involved in streaming online video content will need to decrease. I think there is a rather simple fix for this: vendors in our space must learn to work together and put customers (the content owner, and their customers, the end-users) first. In this sense, as members of the streaming video industry, we are masters of our own fate. That’s one of the reasons why last week, the Streaming Video Alliance and the Consumer Technology Association announced a partnership to establish and advance guidelines for streaming media Quality of Experience.

Additionally, as the culmination of ongoing parallel efforts from the Alliance and CTA, the CTA R4 Video Systems Committee has formally established the QoE working group, WG 20, to bring visibility and accuracy to OTT video metrics, enabling OTT publishers to deliver improved QoE for their direct-to-consumer services. If you want more details on what the SVA is doing, reach out to the SVA’s Executive Director Jason.